Google announced on Monday that it will require advertisers to disclose when their election ads contain digitally altered content depicting real or realistic-looking people or events. The measure is intended to combat misinformation during elections.

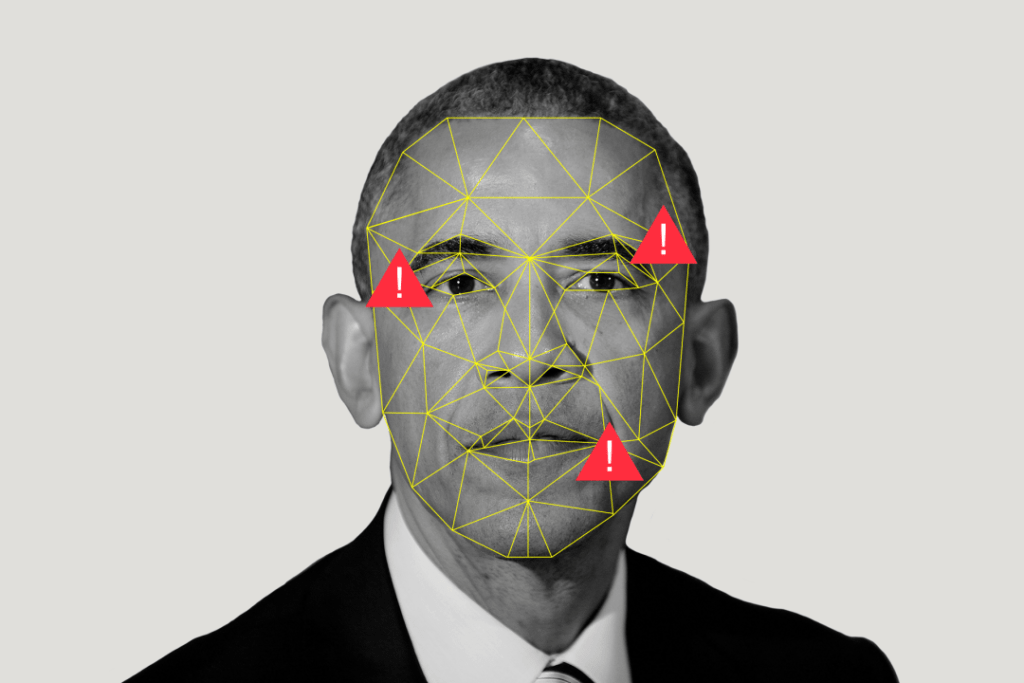

Under the updated policy, advertisers must select a checkbox in the "altered or synthetic content" section of their campaign settings. Generative AI can quickly create text, images, and videos, and its rise has heightened concerns about misuse and about deepfakes: manipulated content that misrepresents someone.

Google will generate an in-ad disclosure for ads in feeds and Shorts on mobile phones and for in-stream ads on computers and televisions. For other formats, advertisers must provide a "prominent disclosure" that is noticeable to users. The acceptable disclosure language will vary depending on the ad's context.

The move follows incidents such as the spread of fake AI-generated videos during India's recent general election, which falsely depicted Bollywood actors criticizing Prime Minister Narendra Modi and endorsing the opposition Congress party.

Separately, OpenAI disclosed in May that it had disrupted five covert influence operations that sought to use its AI models for deceptive activity aimed at manipulating public opinion or influencing political outcomes.

Similarly, Meta Platforms announced last year that it would require advertisers to disclose if AI or other digital tools were used to alter or create political, social, or election-related ads on Facebook and Instagram.

These actions by major tech companies highlight the ongoing efforts to ensure transparency and integrity in political advertising amid the growing influence of AI technologies.